Compiling Scala as a single binary for AWS Lambda

Lion Ralfs

AWS Lambda Functions written in JVM languages are notorious for long cold start durations. This blog post is the result of an experiment in which I tried to use GraalVM to compile my Lambda code (Scala) into a single binary, which led me down the rabbit hole of writing a custom Lambda runtime to be compiled along with the handler code.

Anyone who works with JVM-based Lambda Functions knows that functions that are "cold" unfortunately incur a significant startup overhead. I've personally seen environments with cold start durations of multiple seconds in production, which is unacceptable in most scenarios, especially when end users have to wait as a consequence.

GraalVM (and specifically the native-image project) lets you take a "fat" jar and compile it ahead of time into a standalone executable, promising much faster startup. So I decided to try it out on a simple Scala Lambda Function.

Writing a custom runtime

Since you can't just hand AWS an executable file and tell them, "Hey, here is my Lambda Function, please execute it for every invocation", my first step was to figure out what a custom runtime is, and how to write one and package it so that AWS can run it.

AWS offers its own runtimes for the most popular programming languages, and this is most likely what you already use when you deploy a Lambda: you upload a zip with your code, tell AWS that it's supposed to use Java (or Node.js, Python, Go, …) and you're good to go. However, AWS also lets you implement a custom runtime. You then deploy your Lambda handler code along with the custom runtime and are free to use whatever programming language you want. In my case, I want to execute a compiled binary, but we'll get to that after I've explained what it takes to implement my own runtime.

When a new Lambda instance is started (i.e. on a cold start), AWS tells the runtime to get ready and "bootstrap" itself. I'm calling it bootstrapping because AWS requires an executable file named "bootstrap" as the entry point of a custom runtime. This file, often a shell script, is supposed to initialize the Lambda handler and then run a loop that continuously polls for invocations.

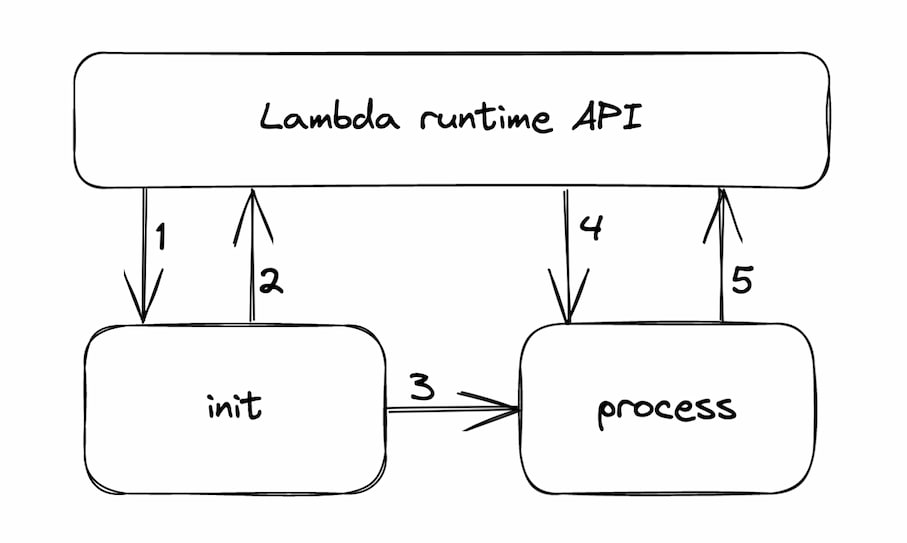

The entire process is illustrated in the figure above. In step 1, upon creating a new Lambda instance, the outside world (AWS) runs your bootstrap script, where you’re expected to set up your handler. In my case, this meant instantiating the handler class. You’re able to access some configuration via environment variables. I’ve listed a few notable ones:

- `_HANDLER`: The location of the handler that you configured. This is essentially the value you set as the `Handler` property when you deploy a function, for example `example.Handler::handleRequest`. Your runtime can use it to locate the handler class and create an instance, and it also knows that it's supposed to call the `handleRequest` method for every invocation.
- `AWS_LAMBDA_RUNTIME_API`: A string containing the host and port of the Runtime API. In later stages, you're expected to send HTTP requests against this API.
- `AWS_LAMBDA_FUNCTION_NAME`, `AWS_LAMBDA_LOG_GROUP_NAME`, `AWS_LAMBDA_FUNCTION_MEMORY_SIZE`, etc.: Metadata about the Lambda Function that you can use to instantiate a class implementing the `Context` interface.
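To make the initialization step more concrete, here is a minimal sketch of how a runtime might read these variables and instantiate the handler via reflection. It assumes the handler class has a public no-argument constructor and exactly one public method with the configured name; `FunctionMetadata`, `HandlerLoader` and their members are hypothetical names for illustration (not part of any AWS library), and the defaults used for missing variables are arbitrary.

```scala
import java.lang.reflect.Method

// Hypothetical container for a few of the metadata variables listed above.
final case class FunctionMetadata(functionName: String, logGroupName: String, memoryLimitMb: Int)

object FunctionMetadata {
  // Takes the environment as a Map (e.g. sys.env) so it is easy to test.
  def fromEnv(env: Map[String, String]): FunctionMetadata = FunctionMetadata(
    functionName = env.getOrElse("AWS_LAMBDA_FUNCTION_NAME", "unknown"),
    logGroupName = env.getOrElse("AWS_LAMBDA_LOG_GROUP_NAME", "unknown"),
    memoryLimitMb = env.get("AWS_LAMBDA_FUNCTION_MEMORY_SIZE").flatMap(_.toIntOption).getOrElse(0)
  )
}

// Hypothetical loader that turns a _HANDLER value such as
// "example.Handler::handleRequest" into an instance plus the method to call.
object HandlerLoader {
  final case class LoadedHandler(instance: AnyRef, method: Method)

  // Splits "<fully.qualified.Class>::<method>" into its two parts.
  def parseHandler(spec: String): (String, String) =
    spec.split("::", 2) match {
      case Array(className, methodName) if className.nonEmpty && methodName.nonEmpty =>
        (className, methodName)
      case _ =>
        throw new IllegalArgumentException(s"Invalid _HANDLER value: $spec")
    }

  // Uses runtime reflection; under native-image the class must be registered
  // for reflection, otherwise it may be removed as unused.
  def load(spec: String): LoadedHandler = {
    val (className, methodName) = parseHandler(spec)
    val clazz = Class.forName(className)
    // Assumes a public no-argument constructor.
    val instance = clazz.getDeclaredConstructor().newInstance().asInstanceOf[AnyRef]
    // Assumes a single public method with this name (overloads are not disambiguated).
    val method = clazz.getMethods
      .find(_.getName == methodName)
      .getOrElse(throw new NoSuchMethodException(s"$className.$methodName"))
    LoadedHandler(instance, method)
  }
}
```

On Lambda, this would be called as `FunctionMetadata.fromEnv(sys.env)` and `HandlerLoader.load(sys.env("_HANDLER"))` once during bootstrapping, so the reflective lookup cost is paid only on a cold start; how the event and context are passed to `method.invoke` depends on the handler's signature.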

If the instantiation fails for whatever reason, you can report the error to the Runtime API via a POST request to `/runtime/init/error` (step 2). Otherwise, you transition to the processing phase (3), where you continually send GET requests to `/runtime/invocation/next` and pass the response to the handler (4). All Runtime API paths are prefixed with the API version, e.g. `http://$AWS_LAMBDA_RUNTIME_API/2018-06-01/runtime/invocation/next`. The response body of the GET request contains the data that the Lambda Function was invoked with. And yes, polling via `while(true) { … }` is fine, since the GET request simply blocks until the next invocation arrives. You now have all the required parts (input + context) to invoke the handler method. Whatever the handler returns, you then POST to `/runtime/invocation/<requestId>/response` and let AWS handle the rest. The `requestId` is passed as a response header in step 4 (`Lambda-Runtime-Aws-Request-Id`).
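The protocol above can be sketched in Scala using only the JDK's built-in `java.net.http.HttpClient` (Java 11+), which avoids pulling third-party HTTP libraries into the native image. This is a minimal illustration under stated assumptions, not the code from the repo: the handler is modeled as a hypothetical `(requestId, eventBody) => responseBody` function instead of a reflective handler plus `Context`, the JSON escaping is deliberately minimal, and handler exceptions are reported to the Runtime API's `/runtime/invocation/<requestId>/error` endpoint.

```scala
import java.net.URI
import java.net.http.{HttpClient, HttpRequest, HttpResponse}

// Sketch of a custom runtime's event loop against the Lambda Runtime API.
object RuntimeLoop {
  // No request timeout is set: GET /invocation/next blocks until an event arrives.
  private lazy val client: HttpClient = HttpClient.newHttpClient()

  // Every Runtime API path is prefixed with the API version.
  def baseUrl(runtimeApi: String): String = s"http://$runtimeApi/2018-06-01/runtime"

  // Minimal JSON string escaping for error payloads.
  def jsonString(s: String): String = {
    val sb = new StringBuilder("\"")
    s.foreach {
      case '"'          => sb.append("\\\"")
      case '\\'         => sb.append("\\\\")
      case '\n'         => sb.append("\\n")
      case '\r'         => sb.append("\\r")
      case '\t'         => sb.append("\\t")
      case c if c < ' ' => sb.append("\\u%04x".format(c.toInt))
      case c            => sb.append(c)
    }
    sb.append('"').toString
  }

  // Error body with the errorMessage/errorType fields the Runtime API accepts.
  def errorJson(e: Throwable): String = {
    val message = Option(e.getMessage).getOrElse("")
    val errorType = e.getClass.getName
    s"""{"errorMessage":${jsonString(message)},"errorType":${jsonString(errorType)}}"""
  }

  private def post(url: String, body: String): Unit = {
    val request = HttpRequest.newBuilder(URI.create(url))
      .POST(HttpRequest.BodyPublishers.ofString(body))
      .build()
    client.send(request, HttpResponse.BodyHandlers.discarding())
  }

  def run(runtimeApi: String, init: () => (String, String) => String): Unit = {
    val base = baseUrl(runtimeApi)
    // Step 2: report initialization failures to /init/error, then give up.
    val handler =
      try init()
      catch {
        case e: Exception =>
          post(s"$base/init/error", errorJson(e))
          throw e
      }
    // Steps 3 and 4: fetch the next event, invoke the handler, post the result.
    while (true) {
      val next = client.send(
        HttpRequest.newBuilder(URI.create(s"$base/invocation/next")).GET().build(),
        HttpResponse.BodyHandlers.ofString())
      val requestId = next.headers().firstValue("Lambda-Runtime-Aws-Request-Id").get()
      try {
        val result = handler(requestId, next.body())
        post(s"$base/invocation/$requestId/response", result)
      } catch {
        case e: Exception => post(s"$base/invocation/$requestId/error", errorJson(e))
      }
    }
  }
}
```

A `main` method could start it with `RuntimeLoop.run(sys.env("AWS_LAMBDA_RUNTIME_API"), () => (requestId, body) => body)`, which would turn the function into a simple echo Lambda. Passing `init` as a function keeps handler construction inside the loop's error handling, so a failing constructor is reported via `/runtime/init/error` rather than just crashing the process.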

Bundling the runtime and the handler

Now that I have a runtime and the handler that I want to deploy, all that’s left is to set up a process to build a jar and create an executable file.

The catch with GraalVM's native-image is that it only builds executables for the platform it's currently running on. Since I'm using a Mac and the code has to run on Amazon Linux 2, I needed a workaround. Luckily, there's a Linux Docker image that already has native-image installed, so I simply run the build inside a Docker container and use Docker volumes to pass the jar into the container and retrieve the resulting binary.

Another thing to be aware of is runtime reflection. My custom runtime uses Java's reflection API to look up the handler class and method at runtime (passed via the `_HANDLER` environment variable, see above). Because native-image performs dead code elimination and can't see that reflective use statically, it threw away the entire handler class as unused. Registering the class in a `reflect-config.json` file (native-image's reflection configuration) prevented this from happening.

To complete the deployment, I have the compiled binary along with a shell script called bootstrap in a directory. The naming is important here, as AWS looks for the bootstrap file as the custom runtime's entrypoint. My bootstrap file simply executes the binary along with some logging.

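```sh
#!/bin/sh
# Entry point that Lambda runs for a custom runtime.
# -e: exit on errors, -u: fail on unset variables
# (pipefail is not POSIX sh, and there are no pipelines here anyway).
set -eu

currentDate=$(date +"%Y-%m-%d %H:%M:%S,%3N")

echo "[$currentDate]: bootstrapping (script)"

# Replace the shell with the native binary, which implements the runtime loop.
exec ./lambda-binary
```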

I'm sure I could have also just named my compiled binary `bootstrap`, but this works as well. Now I can zip this directory, upload it as the function code and set the Lambda runtime to `provided.al2`.

Conclusion

Through this little experiment I learned a lot about how Lambda (roughly) works behind the scenes and what it takes to implement a custom Lambda runtime. After deploying the code, I also put some load on the Function to look at its cold and warm execution times. The function itself doesn't do any work apart from logging some parameters. The test wasn't scientific in any way, but it gave me a good idea of the kind of times I'm dealing with:

The first row shows the warm (non-cold-start) timings in milliseconds; the second row shows the cold starts.

Here are the results for the same handler, but using the AWS-provided runtime (Java 17):

All the code along with some additional links is in this repo if you want to take a look at it yourself.